Last weekend I had the pleasure of equipping my brother’s computer with a new 4GB RAM module. Out of curiosity, I took the opportunity to test my rarely used PXE-boot installation, which already served memtest86+ v4.20.

As you might have guessed, I probably would not be writing this blog post if it had gone through flawlessly. In other words, the test failed and a journey of debugging several (unrelated) problems began.

The first thing I wanted to check was whether memtest86+ works in general. Hence, I immediately rebooted my Lenovo T440s, only to find that PXE boot did not work at all. Fiddling around with the dnsmasq configuration, I tried to make PXE aware of UEFI systems by adding the following:

    pxe-service=x86-64_EFI,EFI,efi/syslinux.efi,1.1.1.1
    pxe-service=x86PC,Standard,pxelinux,1.1.1.1

where 1.1.1.1 of course stands for your TFTP server’s IP address. Unfortunately, this did not bring success. Note that the TFTP server has to serve two files: pxelinux.0 for the classical BIOS boot process and syslinux.efi.0 for UEFI (the 0 is automatically appended by dnsmasq). According to the syslinux documentation, it is advisable to create two separate directories for the binaries, as each comes with its own set of *.c32 files. As none of this brought me closer to a working UEFI PXE-boot setup, I dropped the plan and forced my notebook to boot in BIOS mode.
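For what it is worth, a commonly used alternative to pxe-service, which this post does not cover and which is untested on the T440s, is to tag clients by the architecture they announce in DHCP option 93 and hand out the boot file via dhcp-boot. The following is only a minimal sketch under a few assumptions: the file layout is the one described above, the UEFI firmware reports architecture 7 or 9, and the tag name efi-x86_64 is made up for illustration. Unlike pxe-service, dhcp-boot does not append the .0, so the file names are spelled out in full.

```
# Sketch only, not the configuration used in this post.
# Tag clients that announce a 64-bit x86 UEFI architecture in DHCP option 93.
# 7 and 9 are the values commonly seen from such firmware (assumption).
dhcp-match=set:efi-x86_64,option:client-arch,7
dhcp-match=set:efi-x86_64,option:client-arch,9

# Tagged clients get the EFI binary from its own directory ...
dhcp-boot=tag:efi-x86_64,efi/syslinux.efi.0,,1.1.1.1

# ... everybody else falls back to the classical BIOS loader.
# 1.1.1.1 again stands for the TFTP server's IP address.
dhcp-boot=pxelinux.0,,1.1.1.1
```

Whether this gets a particular UEFI firmware any further than the pxe-service variant depends on the firmware, so treat it as something to try rather than a known fix.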

Falling back to BIOS boot, PXE worked as expected (ThinkPad bug?), but memtest86+ only showed a blue screen with a few pieces of information. Given the age of the initial PXE configuration, I thought that updating memtest86+ would be a good idea and quickly fetched the latest sources, version 5.01. Building was not a problem in a standard Linux environment, although the first signs of immature code were already visible: when executed with the target “all”, make tried to install memtest86+ via scp to a hard-coded IP. After removing the .bin suffix from the memtest executable it almost worked, but it crashed immediately after testing started. Given the bad shape of the makefile, I should have had this idea earlier, but after some time I started searching the package repositories of Linux distributions, which quite often ship patches for (almost) unmaintained software. And indeed, Fedora, for example, offers a set of patches that fix these problems. Applying the patches and deploying the new memtest finally gave the first positive result of that day: a working memtest86+ 5.01 on my T440s.

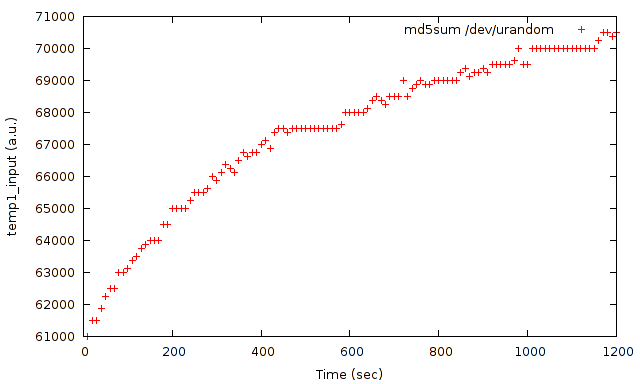

Back to the original problem: the said 4GB module, which was suspected to be faulty. I was immediately disillusioned: the failure was not due to memtest86+. In hindsight, I have to admit that hoping so was a bit optimistic. I therefore bisected the problem and noticed that it was not even specific to that particular module. Realizing that the mainboard in question (Asus M4A89GTD PRO) is a piece of hardware with advertised overclocking capabilities, I next looked for a smoking gun in that direction. And indeed, I got suspicious about the DDR3 RAM operating at 640 MHz instead of the supported 666 MHz and at a voltage slightly above 1.5V. A BIOS update finally fixed things (maybe it just reset the settings to their default values) and memtest86+ passed. The frequency is still reported to be around 640 MHz, but now it is at least working.